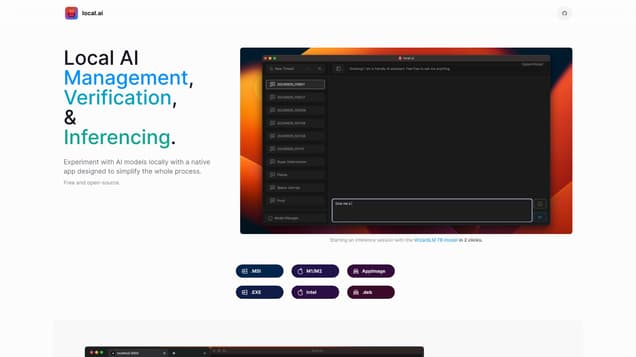

What is local.ai?

local.ai (the Local AI Playground) is a native application for experimenting with AI models on local devices. It lets users run AI tasks offline and privately, with no GPU required.

Information

- Financing: $1.30M

- Revenue: $80.00M

- Language: English

- Price: Free

Website traffic

- Monthly visits: 12.82K

- Avg. visit duration: 00:01:21

- Bounce rate: 70.95%

- Unique users: --

- Total page views: 17.47K

local.ai FAQ

- What is local.ai used for?

- Is local.ai free?

- What are the available features of local.ai?

- What upcoming features can we expect from local.ai?

- What can you do with local.ai's Model Management feature?

local.ai Use Cases

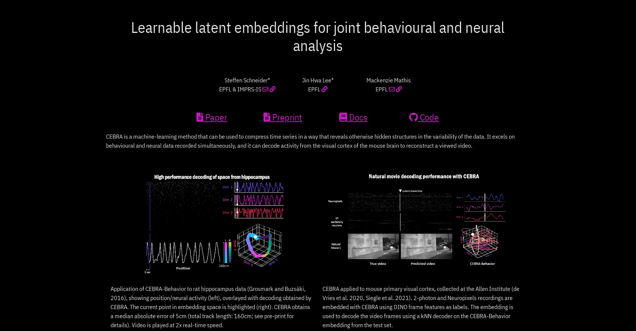

Experiment with AI offline, in private. No GPU required! A native app made to simplify the whole process.

Starting an inference session with the WizardLM 7B model in 2 clicks.

Power any AI app, offline or online. Here in tandem with window.ai.

Powerful Native App: With a Rust backend, local.ai is memory efficient and compact (<10MB on Mac M2, Windows, and Linux .deb).

Available features: CPU Inferencing, Adapts to available threads, GGML quantization q4, 5.1, 8, f16

Upcoming features: GPU Inferencing, Parallel session

Model Management: Keep track of your AI models in one centralized location. Pick any directory!

Available features: Resumable, concurrent downloader, Usage-based sorting, Directory agnostic

Upcoming features: Nested directory, Custom Sorting and Searching
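This listing does not describe how local.ai's downloader works internally. As a rough illustration of what "resumable" usually means over HTTP, the sketch below builds a request that asks a server only for the bytes not yet on disk, using the standard `Range` header. The function name, URL, and file path are hypothetical, and the server is assumed to support range requests.

```python
import os
import urllib.request


def build_resume_request(url: str, partial_path: str) -> urllib.request.Request:
    """Build a GET request for only the bytes still missing from partial_path.

    Illustrative only: this is not local.ai's downloader.
    """
    request = urllib.request.Request(url)
    # Bytes already saved by an earlier, interrupted attempt (0 if no file yet).
    already_have = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    if already_have > 0:
        # Ask for everything from the current offset to the end of the file.
        request.add_header("Range", f"bytes={already_have}-")
    return request
```

A caller would send this request, check for a `206 Partial Content` response, and append the body to the partial file. A `200 OK` response means the server ignored the range, so the file should be rewritten from the start.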

Digest Verification: Ensure the integrity of downloaded models with a robust BLAKE3 and SHA256 digest compute feature.
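To show what digest verification involves, here is a minimal sketch that computes a file's SHA-256 digest with Python's standard library and compares it to a published value. It is not local.ai's implementation; the function names are hypothetical. BLAKE3 is omitted because it is not in Python's standard library and would need a third-party package.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks.

    Chunked reading keeps memory use flat even for multi-gigabyte model files.
    """
    hasher = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel stops when read() returns an empty bytes object.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def matches_published_digest(path: str, expected_hex: str) -> bool:
    """Compare the computed digest with a published one, ignoring case and whitespace."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

If the two digests differ, the download is corrupted or has been altered and should not be loaded.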