What is Prompt Token Counter?

Prompt Token Counter is an online tool for counting the tokens in prompts written for OpenAI models. It helps users monitor their prompts against the token limit of their chosen model, which keeps requests valid and costs under control.

Information

- Language: English

- Price: Free

Website traffic

- Monthly visits: 216

- Avg. visit duration: not available

- Bounce rate: 50.00%

- Unique users: 216

- Total page views: 374

Prompt Token Counter FAQ

- Why is a token counter important?

- How can I count prompt tokens?

- What is a token?

- What is a prompt?

- How do I manage prompt tokens when working with language models?

Prompt Token Counter Use Cases

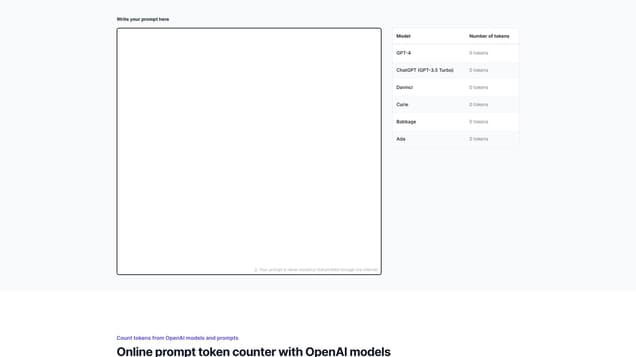

Count tokens from OpenAI models and prompts

Online prompt token counter with OpenAI models

Tracking prompt tokens while using OpenAI models, like GPT-3.5, is essential to ensure you stay within the model's token limits. Token counting is particularly important because the number of tokens in your prompt directly impacts the cost and feasibility of your queries.

To count prompt tokens, you can follow these steps:

1. Understand token limits: Familiarize yourself with the token limit of the specific OpenAI model you're using. For instance, the original gpt-3.5-turbo model has a limit of 4,096 tokens, which covers the prompt and the generated response together.

2. Preprocess your prompt: Before sending your prompt to the model, tokenize it with the same tokenizer the model uses, so that your count matches what the model actually receives. Tokenization libraries such as the OpenAI GPT-3 tokenizer can help with this.

3. Count tokens: Once your prompt is tokenized, count the number of tokens it contains. Keep in mind that tokens do not map one-to-one to words: punctuation, spaces, and special characters also count, and a long or unusual word may be split into several tokens.
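The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the tool's actual implementation: `rough_token_count`, `check_prompt`, and the `reserved_for_reply` parameter are hypothetical names introduced here, and the word-and-punctuation counter is only a crude stand-in for a real tokenizer. The 4,096-token limit is the figure quoted above for gpt-3.5-turbo.

```python
import re
from typing import Callable, Tuple

# Limit quoted in the text for gpt-3.5-turbo; adjust for the model you use.
CONTEXT_LIMIT = 4096


def rough_token_count(text: str) -> int:
    """Crude estimate: each word and each punctuation mark counts as one token.

    This is NOT the model's real tokenizer. Real byte-pair tokenizers often
    split long or rare words into several tokens, so treat the result as a
    rough lower-bound guess only.
    """
    return len(re.findall(r"\w+|[^\w\s]", text))


def check_prompt(
    prompt: str,
    count_tokens: Callable[[str], int] = rough_token_count,
    context_limit: int = CONTEXT_LIMIT,
    reserved_for_reply: int = 500,  # hypothetical budget left for the answer
) -> Tuple[int, bool]:
    """Return (tokens used by the prompt, whether prompt plus reply budget fits)."""
    used = count_tokens(prompt)
    return used, used + reserved_for_reply <= context_limit


if __name__ == "__main__":
    used, fits = check_prompt("Hello, world!")
    print(f"{used} tokens, fits in context: {fits}")
```

For accurate counts, pass a real tokenizer as `count_tokens`. With OpenAI's `tiktoken` package installed, for example, `enc = tiktoken.encoding_for_model("gpt-3.5-turbo")` followed by `lambda text: len(enc.encode(text))` would replace the rough estimate. Keeping the counter pluggable means the limit check stays the same whichever tokenizer is used.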