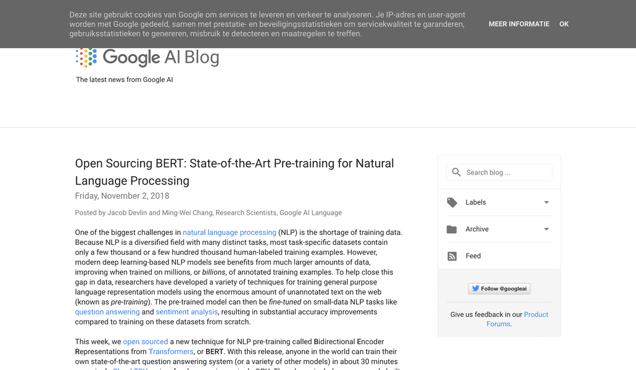

What is Google BERT?

Google BERT, short for Bidirectional Encoder Representations from Transformers, is an open-source pre-training technique for natural language processing (NLP) developed by Google. It is intended to improve language understanding tasks, such as sentence comprehension and sentiment analysis, in various languages. A BERT model is first pre-trained on a large text collection to learn general-purpose language representations, then fine-tuned for a specific task. Typical applications include topic extraction, sentiment identification, search engines, and question answering systems, which makes BERT useful to businesses, developers, and researchers who need fast, accurate text processing.
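To make the word "bidirectional" concrete, the toy sketch below compares the context a left-to-right model would see for a hidden word with the context a bidirectional model would see. It is an illustration only, not BERT's implementation: the function names, the example sentence, and the whitespace tokenization are all assumptions made for this example.

```python
# Toy illustration only: this is not BERT's code, and every name here
# (mask_token, left_to_right_context, bidirectional_context) is hypothetical.
MASK = "[MASK]"


def mask_token(tokens, position):
    """Return a copy of tokens with the token at `position` replaced by [MASK]."""
    masked = list(tokens)
    masked[position] = MASK
    return masked


def left_to_right_context(tokens, position):
    """Context a left-to-right model can use: only the tokens before `position`."""
    return tokens[:position]


def bidirectional_context(tokens, position):
    """Context a bidirectional model can use: the tokens on both sides of `position`."""
    return tokens[:position] + tokens[position + 1:]


# Assumed example sentence, split on whitespace for simplicity.
tokens = "the bank raised interest rates".split()
position = 1  # hide the word "bank"

print(mask_token(tokens, position))
print(left_to_right_context(tokens, position))
print(bidirectional_context(tokens, position))
```

In this example the left-to-right context for the hidden word is just "the", while the bidirectional context also includes "raised interest rates", which is what suggests the financial sense of "bank".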

Information

- Price: Contact for Pricing

Website traffic

- Monthly visits: 385.00K

- Avg visit duration: 00:00:30

- Bounce rate: 74.59%

- Unique users: --

- Total page views: 609.61K

Google BERT FAQ

- What is the purpose of BERT?

- How does BERT differ from previous models?

- What is the advantage of bidirectionality in BERT?

- What tasks can BERT be fine-tuned for?

- Where can I find the open source code and pre-trained BERT models?

Google BERT Use Cases

- BERT can be used for pre-training general-purpose language representation models

- BERT can be fine-tuned on small-data NLP tasks like question answering and sentiment analysis

- BERT achieves state-of-the-art results on 11 NLP tasks, including the Stanford Question Answering Dataset

- BERT improves the state-of-the-art accuracy on diverse Natural Language Understanding tasks

- BERT models can be fine-tuned on a wide variety of NLP tasks in a few hours or less