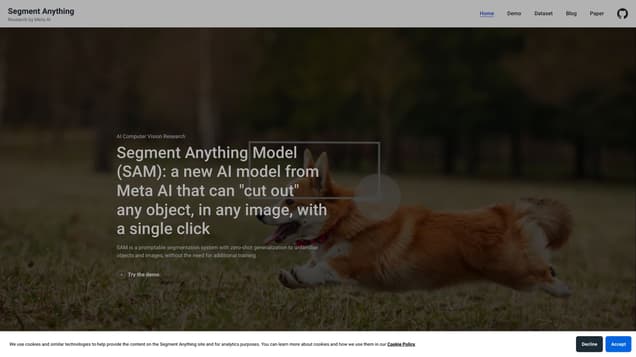

What is Segment Anything?

Segment Anything (SAM) is an image segmentation model from Meta AI. Given a prompt such as a point or a box, it produces a mask that separates the selected object from the rest of the image.

Information

- Revenue: $144.00M
- Language: English
- Price: Contact for Pricing


Website traffic

- Monthly visits: 453.40K
- Average visit duration: 00:03:13
- Bounce rate: 50.18%
- Unique users: 228.28K
- Total page views: 1.20M


Segment Anything FAQ

- What type of prompts are supported?

- What is the structure of the model?

- What platforms does the model use?

- How big is the model?

- How long does inference take?

Segment Anything Use Cases

Segment Anything is an AI model that can 'cut out' any object in an image with a single click.

The model takes prompts that specify what to segment in an image, so it can handle a wide range of segmentation tasks without additional training.

It can be prompted with interactive points and boxes, automatically segment everything in an image, and generate multiple valid masks for ambiguous prompts.
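As a rough illustration of the last two points, the sketch below approximates "segment everything" by placing single-point prompts on a regular grid, and resolves an ambiguous prompt by keeping the candidate mask with the highest predicted score. The names `point_grid` and `best_mask` are hypothetical and are not part of SAM's API; masks are treated as opaque values, and a full pipeline would also discard low-quality masks and remove duplicates, which this sketch omits.

```python
from typing import Any, List, Sequence, Tuple


def point_grid(width: int, height: int, points_per_side: int) -> List[Tuple[float, float]]:
    """Return points_per_side x points_per_side (x, y) pixel coordinates.

    Each point sits at the centre of one grid cell, so none lies on the image border.
    """
    step_x = width / points_per_side
    step_y = height / points_per_side
    return [
        (step_x * (i + 0.5), step_y * (j + 0.5))
        for j in range(points_per_side)  # rows, top to bottom
        for i in range(points_per_side)  # columns, left to right
    ]


def best_mask(candidates: Sequence[Tuple[Any, float]]) -> Any:
    """Return the candidate mask whose predicted quality score is highest."""
    mask, _score = max(candidates, key=lambda candidate: candidate[1])
    return mask


# Usage: a 32 x 32 grid of single-point prompts for a 640 x 480 image.
grid = point_grid(640, 480, 32)
# Three hypothetical candidate masks for one ambiguous click, paired with scores.
choice = best_mask([("pocket", 0.55), ("shirt", 0.93), ("person", 0.71)])
```

Keeping the top score is only one possible policy; an interactive tool could instead show all candidates and let the user choose.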

SAM's promptable design enables flexible integration with other systems, such as taking input prompts from AR/VR headsets or object detectors.
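To make the detector integration concrete, here is a minimal sketch that turns object-detector output into box prompts. It assumes a hypothetical `Detection` record holding a top-left corner, width, height, class name, and confidence score, and converts each confident detection into an `(x0, y0, x1, y1)` corner box; the 0.5 threshold is an arbitrary example value.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


@dataclass
class Detection:
    """Hypothetical detector output: top-left corner, size, class name, confidence."""
    x: float
    y: float
    w: float
    h: float
    label: str
    score: float


def detections_to_box_prompts(detections: List[Detection], min_score: float = 0.5) -> List[Box]:
    """Convert confident detections into corner-format box prompts."""
    boxes: List[Box] = []
    for det in detections:
        if det.score < min_score:
            continue  # do not prompt the segmenter with low-confidence boxes
        boxes.append((det.x, det.y, det.x + det.w, det.y + det.h))
    return boxes


# Usage: only the confident "dog" detection becomes a box prompt.
prompts = detections_to_box_prompts([
    Detection(10, 20, 30, 40, "dog", 0.9),
    Detection(0, 0, 5, 5, "cat", 0.2),
])
```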

The output masks generated by SAM can be used as inputs to other AI systems, such as tracking object masks in videos or enabling image editing applications.
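As an example of downstream use, an image-editing tool might turn a binary mask into a transparent cutout, and a video tracker might start from the mask's bounding box. The helpers `cutout` and `mask_bbox` are hypothetical; they use plain nested lists instead of image arrays so the sketch has no dependencies.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]        # RGB
RGBA = Tuple[int, int, int, int]    # RGB plus alpha
Mask = List[List[bool]]             # True where the object is


def cutout(image: List[List[Pixel]], mask: Mask) -> List[List[RGBA]]:
    """Return an RGBA image: object pixels opaque, everything else fully transparent."""
    return [
        [(r, g, b, 255 if keep else 0) for (r, g, b), keep in zip(row, mask_row)]
        for row, mask_row in zip(image, mask)
    ]


def mask_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return the tight, inclusive (x0, y0, x1, y1) box around the mask, or None if empty."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, keep in enumerate(row) if keep]
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))


# Usage: a 3 x 2 image whose object occupies the right-hand pixels.
image = [[(1, 1, 1), (2, 2, 2), (3, 3, 3)],
         [(4, 4, 4), (5, 5, 5), (6, 6, 6)]]
mask = [[False, True, True],
        [False, False, True]]
rgba = cutout(image, mask)
box = mask_bbox(mask)
```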

SAM has learned a general notion of what objects are, enabling zero-shot generalization to unfamiliar objects and images without requiring additional training.

The model is designed to be efficient and flexible: the image encoder runs only once per image to produce an image embedding, after which a lightweight mask decoder can run in a web browser in just a few milliseconds per prompt.
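Running the encoder once is what makes interactive use cheap: the expensive step is paid once per image, and each further click only runs the decoder. The sketch below shows that caching pattern with caller-supplied stand-in functions; it is not SAM's code, and `CachedSegmenter`, `fake_encode`, and `fake_decode` are hypothetical names. Meta's reference `segment_anything` package exposes a comparable split, with `SamPredictor.set_image` computing the embedding and `SamPredictor.predict` handling individual prompts.

```python
from typing import Any, Callable, Dict, Hashable, List


class CachedSegmenter:
    """Run an expensive image encoder once per image, then a cheap decoder per prompt.

    `encode` and `decode` are hypothetical stand-ins for a heavy image encoder
    and a lightweight mask decoder.
    """

    def __init__(self, encode: Callable[[Any], Any], decode: Callable[[Any, Any], Any]) -> None:
        self._encode = encode
        self._decode = decode
        self._embeddings: Dict[Hashable, Any] = {}

    def segment(self, image_id: Hashable, image: Any, prompt: Any) -> Any:
        # Compute the embedding only the first time this image is seen.
        if image_id not in self._embeddings:
            self._embeddings[image_id] = self._encode(image)
        # Every prompt reuses the cached embedding; only the decoder runs.
        return self._decode(self._embeddings[image_id], prompt)


# Usage: three clicks on the same image trigger one encode and three decodes.
encode_calls: List[str] = []


def fake_encode(image: str) -> str:
    encode_calls.append(image)
    return f"embedding of {image}"


def fake_decode(embedding: str, prompt: tuple) -> str:
    return f"mask for {prompt} from {embedding}"


segmenter = CachedSegmenter(fake_encode, fake_decode)
masks = [segmenter.segment("photo-1", "photo-1 pixels", click)
         for click in [(10, 20), (30, 40), (50, 60)]]
```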

SAM supports different types of prompts, including foreground/background points, bounding boxes, and masks.
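A single prompt can combine several of these inputs. The sketch below packs them into one hypothetical `Prompt` object, assuming the convention used by Meta's reference `segment_anything` package, where a point label of 1 marks foreground and 0 marks background, and a box is given as `(x0, y0, x1, y1)` corners. The optional `mask` field stands in for a mask prompt, such as a previous prediction being refined.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

FOREGROUND, BACKGROUND = 1, 0  # assumed label convention: 1 = include, 0 = exclude


@dataclass
class Prompt:
    """Hypothetical container for one segmentation request."""
    points: List[Tuple[float, float]] = field(default_factory=list)
    labels: List[int] = field(default_factory=list)
    box: Optional[Tuple[float, float, float, float]] = None  # (x0, y0, x1, y1)
    mask: Optional[List[List[float]]] = None  # e.g. a previous mask to refine

    def add_point(self, x: float, y: float, foreground: bool = True) -> "Prompt":
        self.points.append((x, y))
        self.labels.append(FOREGROUND if foreground else BACKGROUND)
        return self

    def set_box(self, x0: float, y0: float, x1: float, y1: float) -> "Prompt":
        # Normalise so (x0, y0) is the top-left corner even if the box was
        # dragged from bottom-right to top-left.
        self.box = (min(x0, x1), min(y0, y1), max(x0, x1), max(y0, y1))
        return self


# Usage: one foreground click, one background click, and a box.
prompt = (Prompt()
          .add_point(120, 80)
          .add_point(200, 150, foreground=False)
          .set_box(300, 200, 100, 50))
```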

The image encoder is implemented in PyTorch and requires a GPU for efficient inference. The prompt encoder and mask decoder can run directly in PyTorch, or be converted to ONNX and run on CPU or GPU on a variety of platforms that support ONNX Runtime.

SAM was trained on the SA-1B dataset, which includes 11 million licensed and privacy-preserving images with over 1.1 billion segmentation masks.
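Dividing the two dataset figures gives a sense of how densely SA-1B is annotated: roughly 100 masks per image on average, or slightly more, since the mask count is stated as "over" 1.1 billion.

```python
images = 11_000_000        # "11 million" images in SA-1B
masks = 1_100_000_000      # "over 1.1 billion" masks (a lower bound)
masks_per_image = masks / images
print(f"At least about {masks_per_image:.0f} masks per image on average")
```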